
In this article we will delve into the installation and configuration of Microsoft’s innovative virtualization platform, Azure Stack HCI, specifically version 23H2.

For demonstration purposes, I will be deploying 23H2 into my lab, on virtualized hardware.

This release is the newest iteration of Microsoft’s hyper-converged infrastructure solution, designed to streamline operations, increase efficiency and reduce costs. Azure Stack HCI is an infrastructure platform that integrates with the Azure ecosystem, providing a robust and flexible way for businesses to run virtual machines and containers on-premises.

An interesting new feature of 23H2 is that on-premises Azure Stack HCI clusters can be deployed and managed directly from Azure. We’ll explore this later in the post, but first we’ll cover the basic deployment steps.

To ensure you’re not misled: the official Microsoft documentation for installing HCI is very good. It’s thorough, but it takes a long time to work through. This guide is for when you need a fast track to a basic lab. At times these instructions may not be compatible with your environment; in that case, review the official documentation for your scenario.

Prerequisites

Choose a supported deployment topology

- In this demonstration, I will be using a two-node deployment (with switch)

Procure your supported hardware

A note on using Virtual Machines

- For this demonstration I will be using virtual machines as my HCI nodes. This is an unsupported deployment, but it’s useful for exploring the solution in a non-production environment, taking advantage of the generous 60-day free trial.

- Make sure your VMs meet the specifications in the supported hardware guidance.

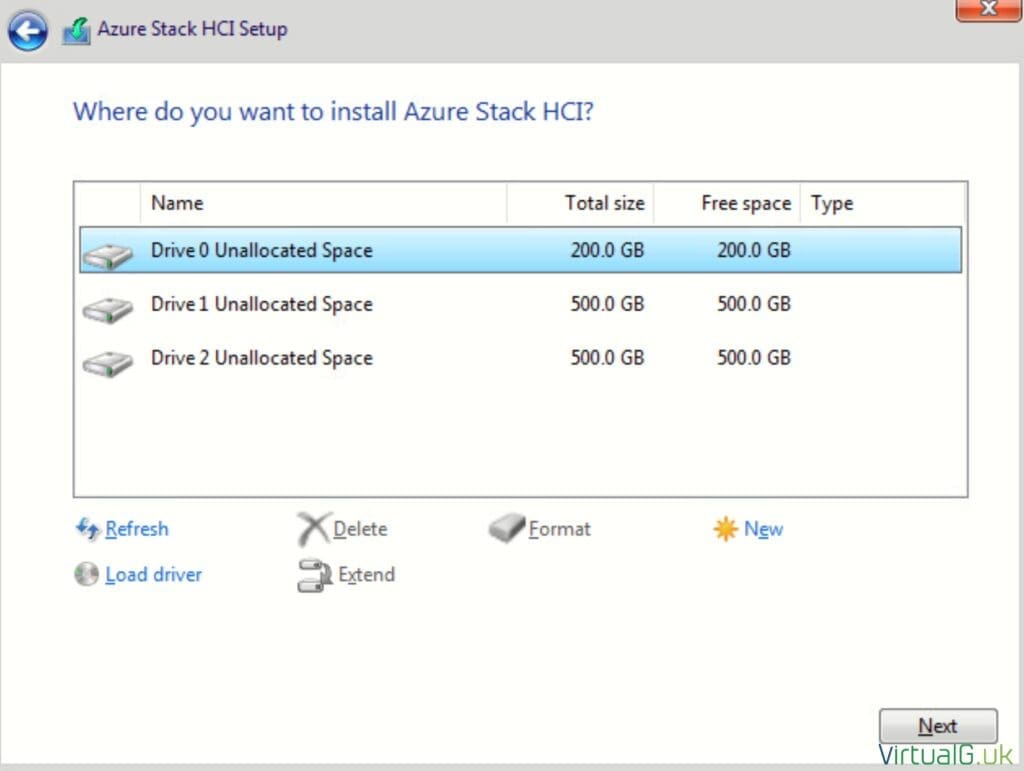

- Storage: Thin-provisioned disks are fine. I used 1x 200GB disk for the OS and 2x 500GB disks per VM for the S2D (Storage Spaces Direct) storage. Ideally, place your S2D disks on a dedicated disk controller, but it’s not a major issue if you’re just demoing the solution.

- NICs: If using vSphere/VCF/ESXi as the hypervisor for your virtual machines, use VMXNET3 virtual NICs. I added one NIC for management/compute and a second dedicated to storage.

- VMware Tools: VMware Tools must be installed if you’re using VMXNET3 network adapters.

- vSwitch: I connected the storage NICs to a port group with a VLAN ID setting of “All (4095)”.

Active Directory Preparation

Before we begin installing the HCI OS on our servers, we need to prepare Active Directory.

Since these instructions are subject to change for newer builds of 23H2, this is the official Microsoft guidance for AD preparation: Prepare Active Directory for new Azure Stack HCI, version 23H2 deployment – Azure Stack HCI | Microsoft Learn

- Follow the Prepare Active Directory section of the guidance via the link above

Example commands for my lab environment

- Adjust the following sample commands where required:

Install-Module -Name AsHciADArtifactsPreCreationTool

New-HciAdObjectsPreCreation -AzureStackLCMUserCredential (Get-Credential) -AsHciOUName "OU=HCI,OU=Lab,DC=Lab,DC=local"

- When prompted for a credential, specify a new user that the tool will create for administering your Azure Stack HCI cluster. In my lab I specified HCI and provided a compatible password: “at least 12 characters long and contains a lowercase character, an uppercase character, a numeral, and a special character.”

- Ensure the new OU is created in Active Directory

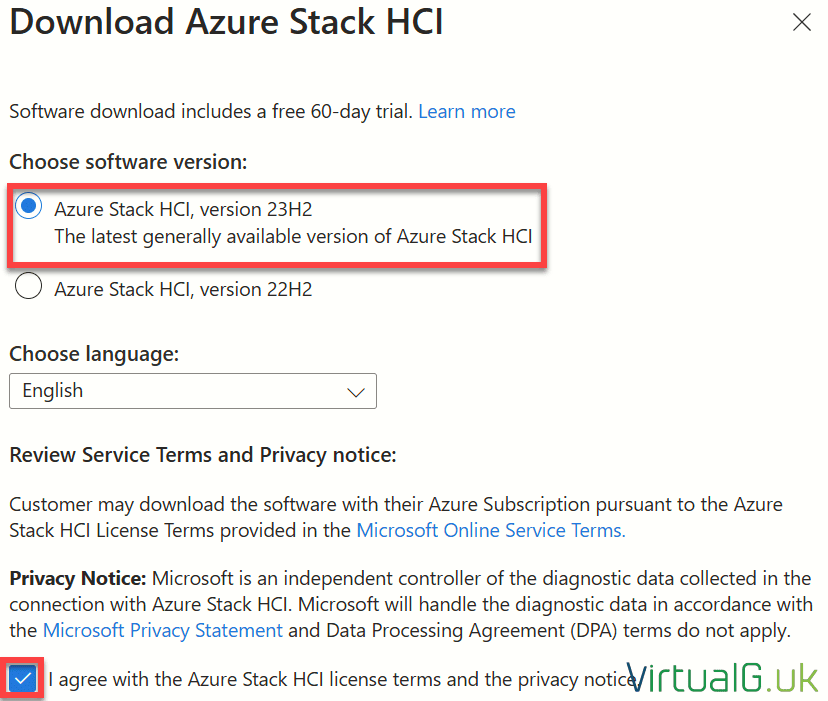
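Because the deployment user’s password must meet the complexity rule quoted above, it can save a failed run to check a candidate password locally first. The sketch below is a hypothetical helper, `Test-HciPasswordComplexity`, that mirrors only the 12-character minimum and the four character-class rules quoted in this post. It is not part of any Microsoft module, and the deployment still performs its own validation.

```powershell
# Hypothetical helper: local pre-check against the Azure Stack HCI password rule
# quoted in this post. The real deployment validation may be stricter.
function Test-HciPasswordComplexity {
    param(
        [Parameter(Mandatory)]
        [string]$Password
    )

    $hasLength  = $Password.Length -ge 12          # at least 12 characters
    $hasLower   = $Password -cmatch '[a-z]'        # a lowercase character (case-sensitive)
    $hasUpper   = $Password -cmatch '[A-Z]'        # an uppercase character (case-sensitive)
    $hasDigit   = $Password -match '[0-9]'         # a numeral
    $hasSpecial = $Password -match '[^a-zA-Z0-9]'  # any non-alphanumeric character

    return ($hasLength -and $hasLower -and $hasUpper -and $hasDigit -and $hasSpecial)
}
```

For example, `Test-HciPasswordComplexity -Password (Get-Credential).GetNetworkCredential().Password` returns `$true` only when every rule is satisfied. This sketch treats any non-alphanumeric character, including a space, as special, which may be looser than Microsoft’s own check.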

Download Azure Stack HCI from Azure

Assuming you have met all the prerequisites and procured your supported hardware, next we need to download the latest Azure Stack HCI image from the Microsoft Azure Portal.

- Log in to the Azure Portal and search for Azure Stack HCI

- Select the Download Azure Stack HCI option

- Select the latest version and your preferred language, and accept the license terms

- Select Download Azure Stack HCI

By default, Azure Stack HCI comes with a generous 60-day trial.

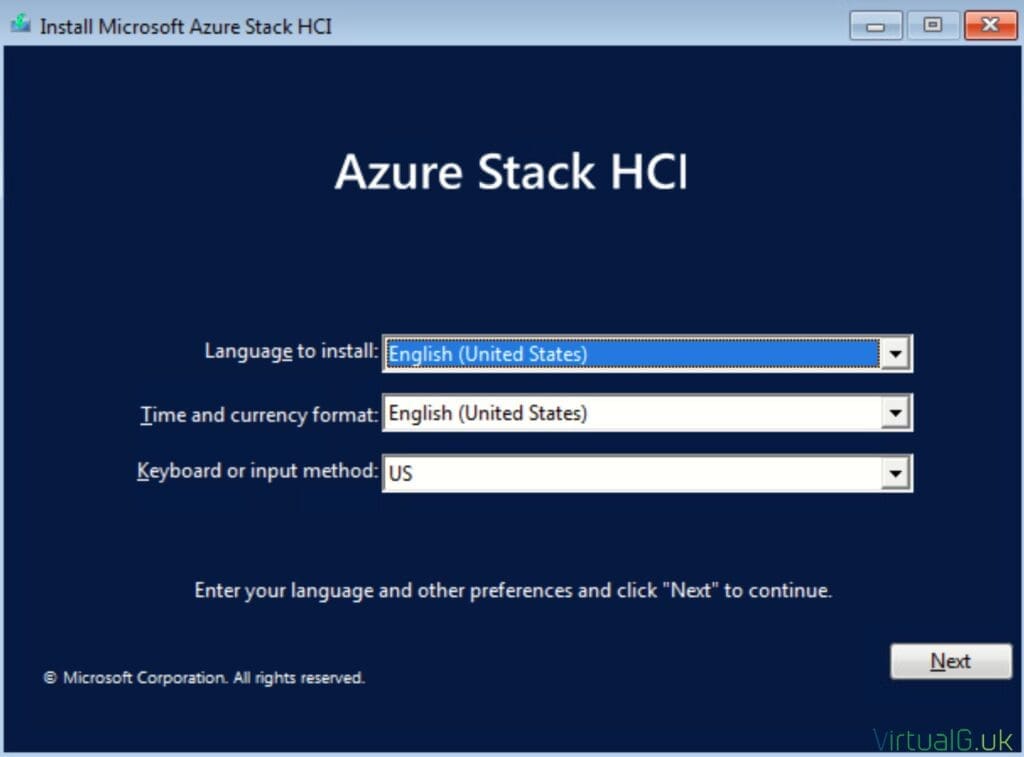

Install the Azure Stack HCI Operating System

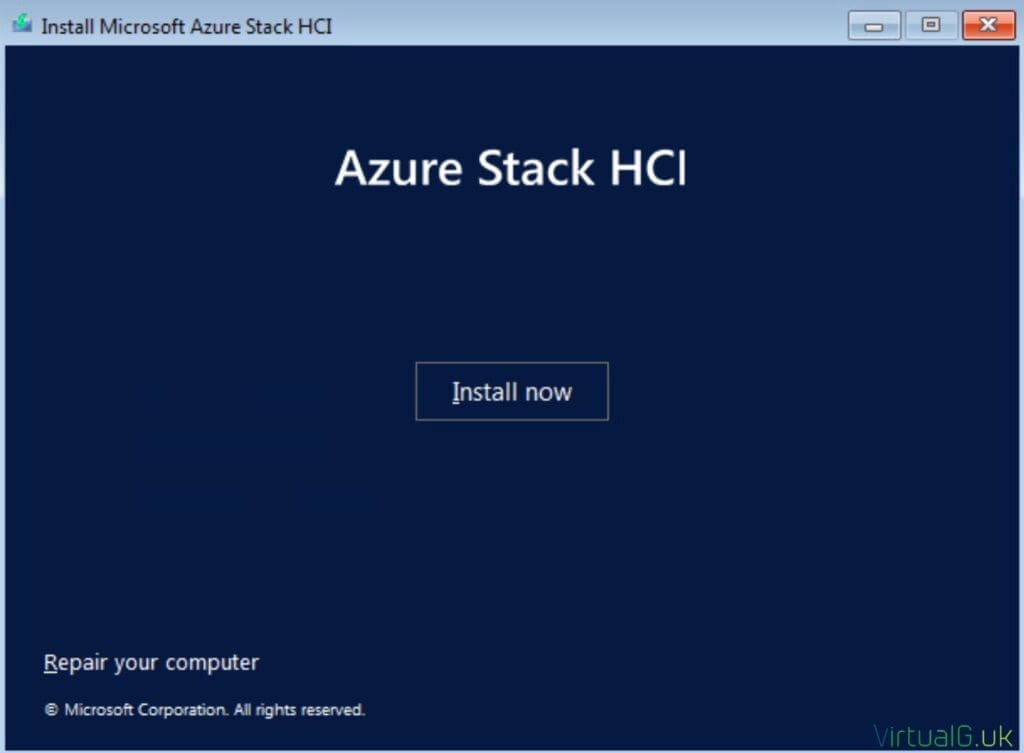

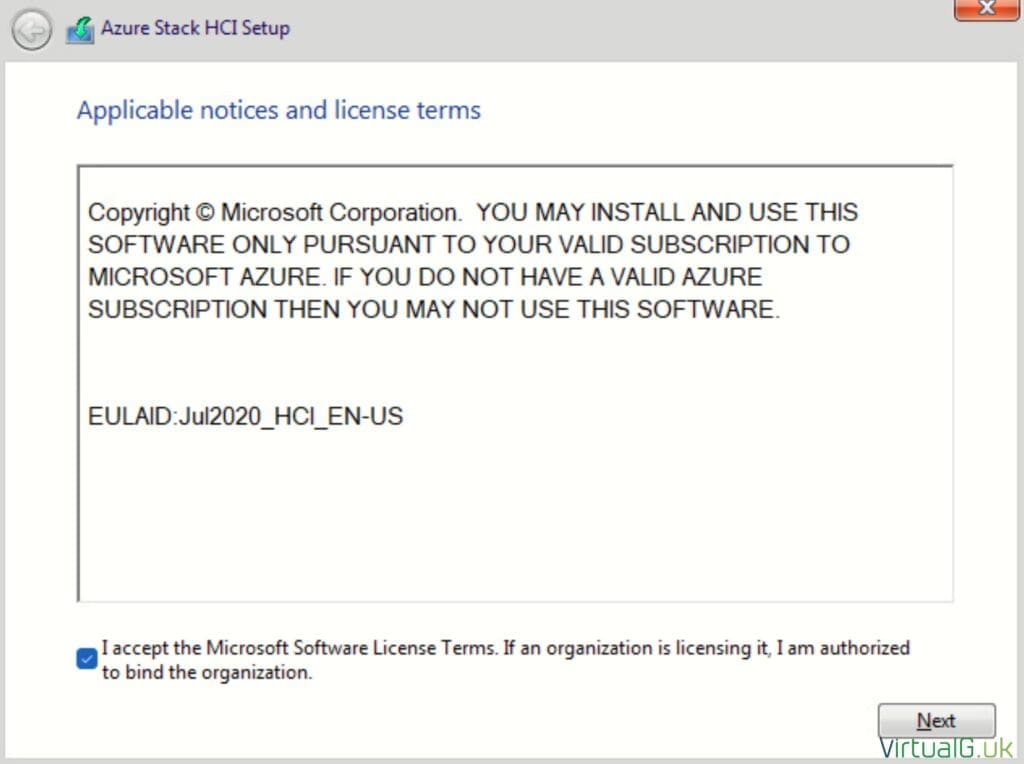

After mounting the ISO to each of your servers, follow the simple on-screen wizard:

- Select Language, Time and Keyboard settings

- Install Now

- Accept the Terms and Conditions

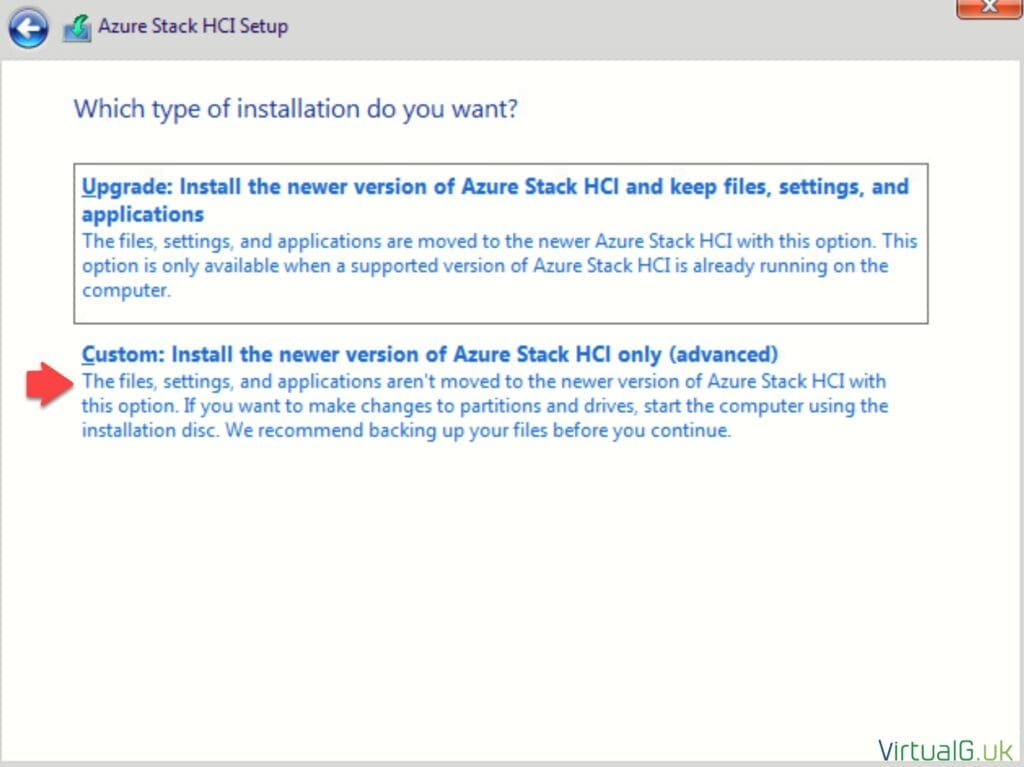

- Select “Custom: Install the newer version of Azure Stack HCI only (advanced)”

- Select the OS drive. Recall from the supported hardware page that it must be at least 200GB in size

- Wait for the installation to complete

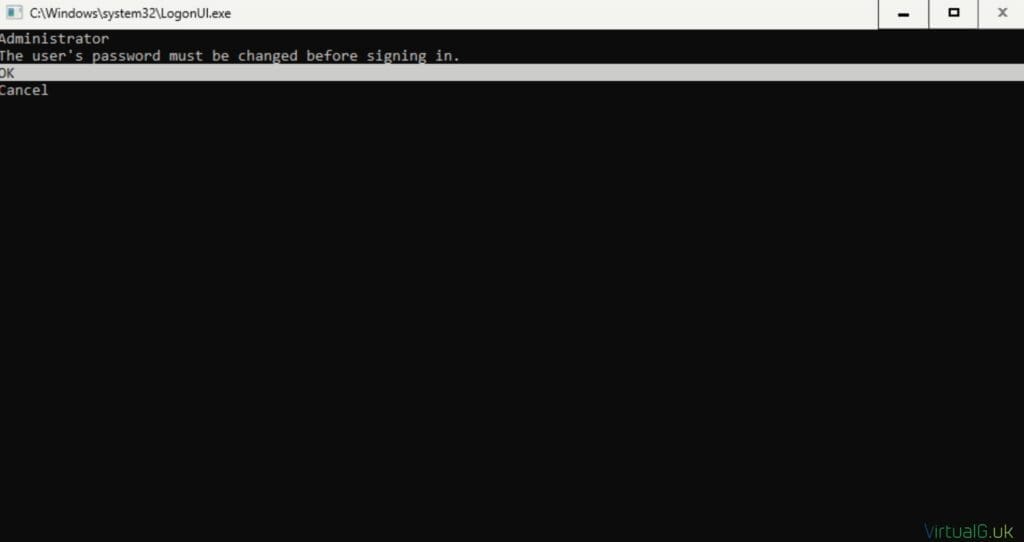

- Once the OS is installed and the server has automatically rebooted, you’ll be presented with a logon screen similar to a Windows Server Core system.

- Press Ctrl+Alt+Del and set a new, complex administrator password that meets the Azure Stack HCI requirements: “at least 12 characters long and contains a lowercase character, an uppercase character, a numeral, and a special character.”

- Use the same administrator password on all HCI servers.

- Install your server vendor’s latest drivers and firmware for the HCI OS, then reboot the server.

- If running virtual machines on vSphere/VCF/ESXi, install VMware Tools:

- Mount the VMware Tools installer via ESXi/vCenter Server

- Run D:\setup.exe (adjust the drive letter if your CD/DVD drive differs)

- The installer may launch behind the PowerShell window

- Reboot when prompted.

- Configure the physical networking as per your prior topology choice.

- Set IP address, Subnet Mask, Gateway and DNS

- Alternatively, if your servers receive a DHCP address with a working DNS server and gateway, there is usually no need to configure a static IP address at this stage. However, I’d advise setting one, or at least creating DHCP reservations, so that the IP address doesn’t change in the middle of the deployment.

- You should also refrain from joining your servers to your domain or installing Windows updates at this time.

- You must remove all installation media (Eject CD/DVDs) from the HCI servers before continuing.

- If you fail to eject all HCI installation media, you’ll encounter the error: AzStackHci_Hardware_Test_MountedMedia_Exists
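To catch the AzStackHci_Hardware_Test_MountedMedia_Exists error before validation, you can query each node’s optical drives for loaded media. This is a minimal sketch, assuming the hypervisor’s virtual CD/DVD drive appears as a `Win32_CDROMDrive` instance whose `MediaLoaded` property is set while an ISO is attached. `Get-HciLoadedMedia` is a hypothetical helper that takes the drive objects as input, so the filter can also be exercised offline.

```powershell
# Hypothetical helper: given Win32_CDROMDrive-style objects, return the drives
# that still report loaded media (for example, an attached installation ISO).
function Get-HciLoadedMedia {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [object[]]$CdromDrive
    )
    # MediaLoaded is $true when a disc or ISO is present in the drive
    $CdromDrive | Where-Object { $_.MediaLoaded }
}
```

On a node, run `@(Get-HciLoadedMedia -CdromDrive @(Get-CimInstance -ClassName Win32_CDROMDrive))`. Any drive it returns (see its `Drive` property) still needs its media ejected from the hypervisor side.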

Set up your time configuration:

- Log in to each server and launch SConfig.

- Open PowerShell (Option 15)

w32tm /config /manualpeerlist:"ntp.lab.local" /syncfromflags:manual /update

- Confirm the time settings with:

w32tm /query /status

Get-Date

- Enable RDP via SConfig (Option 7)

- Change the computer name of the servers and reboot (Option 2)

- Next, install the Hyper-V role on all servers:

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All

- Ensure all installation media is removed from the servers.

- Reboot the servers when prompted.
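To confirm that a node is actually syncing from your NTP server rather than its local clock, you can pull the `Source:` line out of the `w32tm /query /status` output. The helper below, `Get-W32tmSource`, is a hypothetical sketch that assumes English-language `w32tm` output. `ntp.lab.local` is the lab peer configured above.

```powershell
# Hypothetical helper: extracts the time source from `w32tm /query /status` output.
# Assumes English output, where the relevant line looks like "Source: ntp.lab.local".
function Get-W32tmSource {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [AllowEmptyString()]
        [string[]]$StatusOutput
    )
    foreach ($line in $StatusOutput) {
        # Anchor at line start so "ReferenceId: ... (source IP: ...)" is not matched
        if ($line -match '^\s*Source:\s*(.+?)\s*$') {
            # Drop any ",0x8"-style flags that may follow a manually configured peer
            return ($Matches[1] -split ',')[0]
        }
    }
    return $null
}
```

Once the peer is in use, `(Get-W32tmSource -StatusOutput (w32tm /query /status)) -eq 'ntp.lab.local'` should be true. A value such as `Local CMOS Clock` suggests the node is not yet syncing from the configured peer.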

Azure Configuration

- On your management system, install the Az PowerShell module

- Register the following resource providers. Follow the official instructions to do this via the Azure portal, PowerShell or Azure CLI.

- Microsoft.HybridCompute

- Microsoft.GuestConfiguration

- Microsoft.HybridConnectivity

- Microsoft.AzureStackHCI

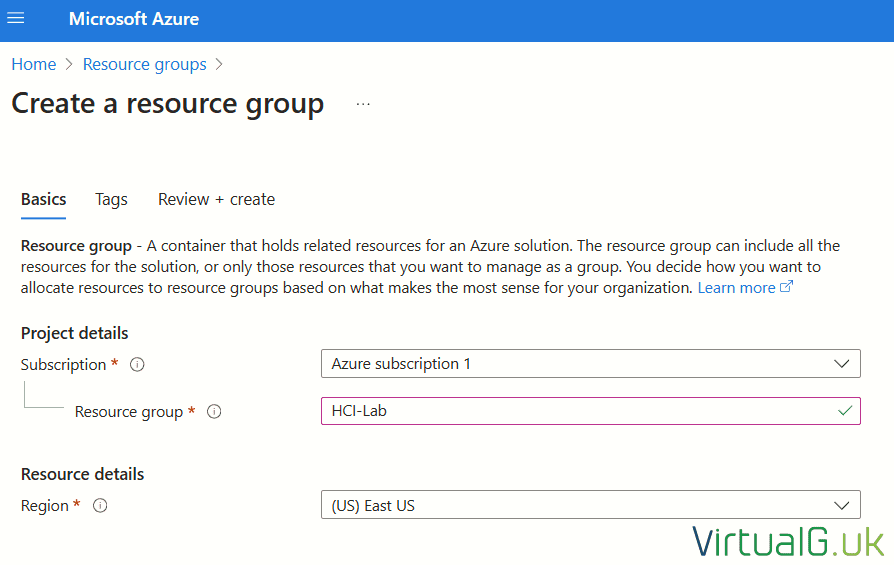

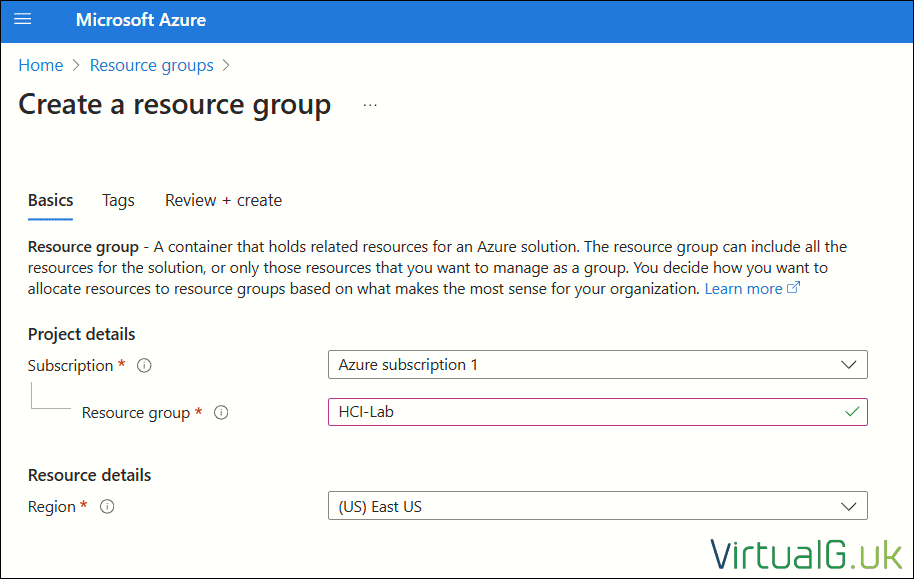

- Create a resource group
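If you prefer PowerShell to the portal for this step, provider registration can be scripted with the Az.Resources cmdlets `Register-AzResourceProvider` and `Get-AzResourceProvider`. The wrapper name `Register-HciResourceProviders` is hypothetical. The sketch assumes you have already signed in with `Connect-AzAccount` and selected the correct subscription.

```powershell
# Resource provider namespaces listed above
$HciResourceProviders = @(
    'Microsoft.HybridCompute'
    'Microsoft.GuestConfiguration'
    'Microsoft.HybridConnectivity'
    'Microsoft.AzureStackHCI'
)

# Hypothetical wrapper. Requires Az.Resources and an authenticated session.
function Register-HciResourceProviders {
    foreach ($namespace in $HciResourceProviders) {
        Register-AzResourceProvider -ProviderNamespace $namespace | Out-Null
    }

    # Report the current state, which moves from "Registering" to "Registered"
    foreach ($namespace in $HciResourceProviders) {
        Get-AzResourceProvider -ProviderNamespace $namespace |
            Select-Object -First 1 -Property ProviderNamespace, RegistrationState
    }
}
```

After running `Register-HciResourceProviders`, you could create the resource group with `New-AzResourceGroup -Name "YourResourceGroupName" -Location "eastus"`, using the same placeholder values as the registration variables later in this post. Registration can take a few minutes, so re-run `Get-AzResourceProvider` until every namespace reports `Registered`.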

Register the HCI servers with Azure Arc

- On each of your HCI servers, follow the official instructions to register them with Azure Arc

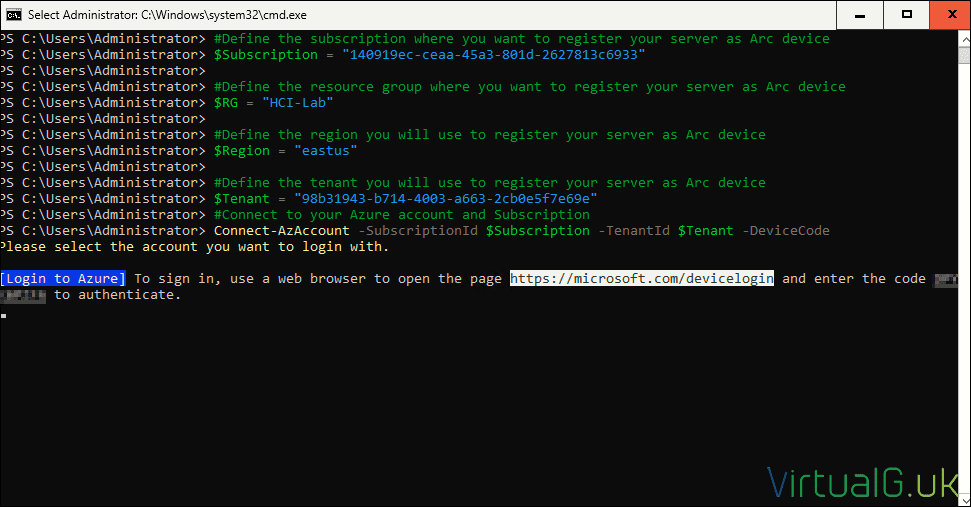

In my lab, I used the following PowerShell to register my HCI servers with Azure Arc:

- Install Modules

Install-Module Az.Accounts -RequiredVersion 3.0.4

Install-Module Az.Resources -RequiredVersion 6.12.0

Install-Module Az.ConnectedMachine -RequiredVersion 0.8.0

Install-Module AzsHCI.ARCinstaller

- Set the following PowerShell variables

$Subscription = "YourSubscriptionID"

$RG = "YourResourceGroupName"

$Region = "eastus"

$Tenant = "YourTenantID"

# Optional

$ProxyServer = "http://proxyaddress:port"

Connect to Azure

- Connect to your Azure account and Subscription

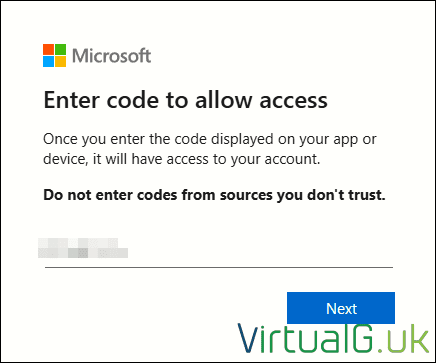

Connect-AzAccount -SubscriptionId $Subscription -TenantId $Tenant -DeviceCode

- Follow the prompt to authenticate with a device code, using a browser on your management server:

- Get the Access Token for the registration

$ARMtoken = (Get-AzAccessToken).Token

If you have issues obtaining the access token, consider setting EnableLoginByWam to false:

Update-AzConfig -EnableLoginByWam $false

- Get the Account ID for the registration

$id = (Get-AzContext).Account.Id

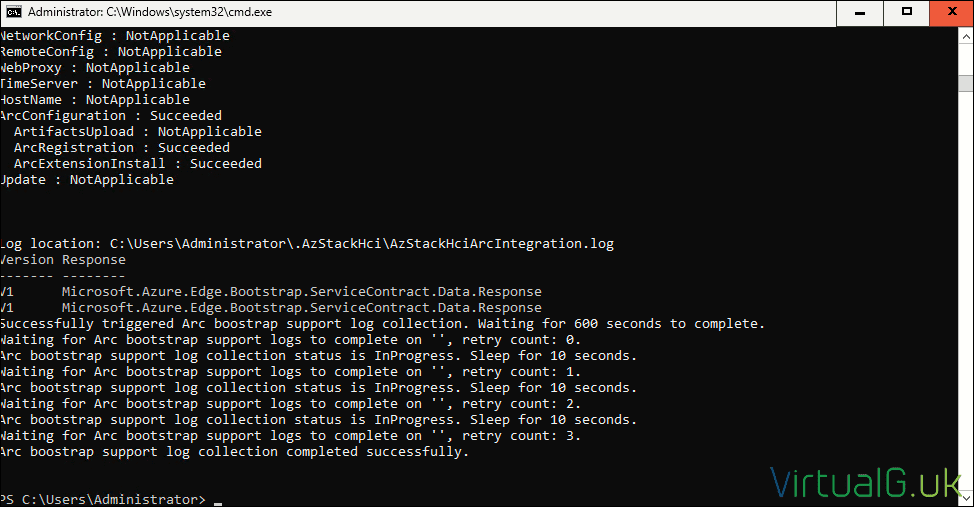

- Ensure the Arc Registration module is installed, then run the Arc Installation script:

Invoke-AzStackHciArcInitialization -SubscriptionID $Subscription -ResourceGroup $RG -TenantID $Tenant -Region $Region -Cloud "AzureCloud" -ArmAccessToken $ARMtoken -AccountID $id

- If you are using a proxy server, instead run:

Invoke-AzStackHciArcInitialization -SubscriptionID $Subscription -ResourceGroup $RG -TenantID $Tenant -Region $Region -Cloud "AzureCloud" -ArmAccessToken $ARMtoken -AccountID $id -Proxy $ProxyServer

This will take some time to complete.

- Once the above finally completes on all servers, you should see them listed under the specified Azure resource group
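Rather than refreshing the portal, you can check from your management system that every node has registered and reports as connected. This sketch assumes the Az.ConnectedMachine module’s `Get-AzConnectedMachine` cmdlet returns objects with `Name` and `Status` properties, and that each Arc resource is named after the server’s hostname. `Get-HciArcNodeProblem` and the node names `HCI01`/`HCI02` are hypothetical.

```powershell
# Hypothetical helper: lists expected nodes that are missing from Arc or not Connected.
function Get-HciArcNodeProblem {
    param(
        # Objects shaped like Get-AzConnectedMachine output (Name, Status)
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [object[]]$Machine,

        # Hostnames you expect to see registered
        [Parameter(Mandatory)]
        [string[]]$ExpectedNode
    )

    # Names of Arc resources that currently report as Connected
    $connected = @($Machine | Where-Object { $_.Status -eq 'Connected' } | ForEach-Object { $_.Name })

    # Return every expected node that is missing or not Connected
    $ExpectedNode | Where-Object { $connected -notcontains $_ }
}
```

For example, `Get-HciArcNodeProblem -Machine @(Get-AzConnectedMachine -ResourceGroupName $RG) -ExpectedNode 'HCI01','HCI02'` returns nothing when all nodes are connected. Otherwise it returns the names of the nodes that need attention.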

Setup Azure Permissions

- This section depends on how your subscription is set up; follow the official guide to configure your Azure subscription permissions correctly.

Before proceeding, double-check that your role assignments are complete.

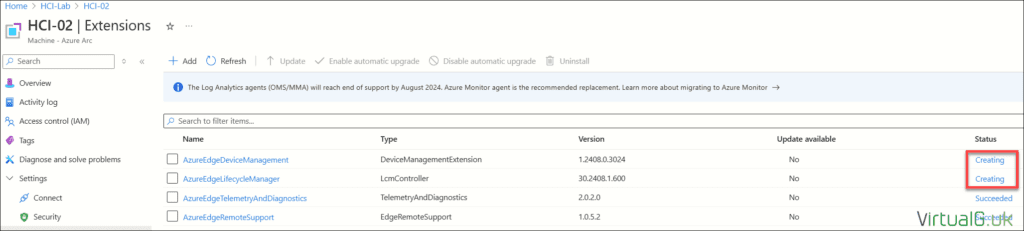

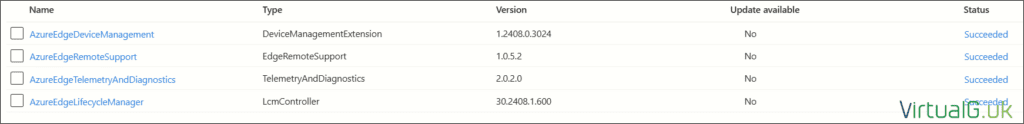

Also ensure the extensions on each HCI server within the resource group have finished being created. In my example, they were still being created.
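The extension check can be scripted too. This is a minimal sketch, assuming the Az.ConnectedMachine cmdlet `Get-AzConnectedMachineExtension` returns objects with `Name` and `ProvisioningState` properties. `Get-HciPendingExtension` is a hypothetical helper that lists any extension not yet in the `Succeeded` state.

```powershell
# Hypothetical helper: returns extensions that have not finished provisioning.
function Get-HciPendingExtension {
    param(
        # Objects shaped like Get-AzConnectedMachineExtension output (Name, ProvisioningState)
        [Parameter(Mandatory)]
        [AllowEmptyCollection()]
        [object[]]$Extension
    )
    # Anything not yet "Succeeded" (for example "Creating" or "Failed") still needs attention
    $Extension |
        Where-Object { $_.ProvisioningState -ne 'Succeeded' } |
        Select-Object -Property Name, ProvisioningState
}
```

For example, `foreach ($node in 'HCI01','HCI02') { Get-HciPendingExtension -Extension @(Get-AzConnectedMachineExtension -ResourceGroupName $RG -MachineName $node) }`, where the node names are hypothetical. An empty result means every extension has finished provisioning.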

Deploying the Cluster from the Azure Portal

Now that Azure and the HCI servers are all configured, we can begin the “fun” part of the deployment process.

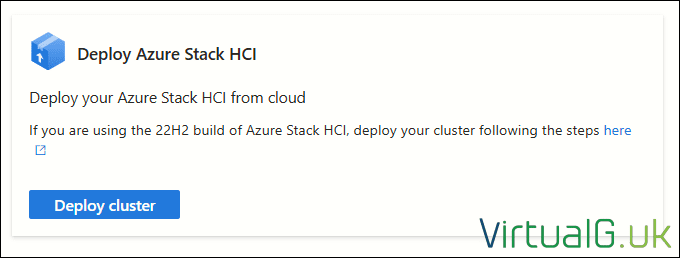

Within the Azure Portal, navigate to Azure Stack HCI and select Deploy Cluster:

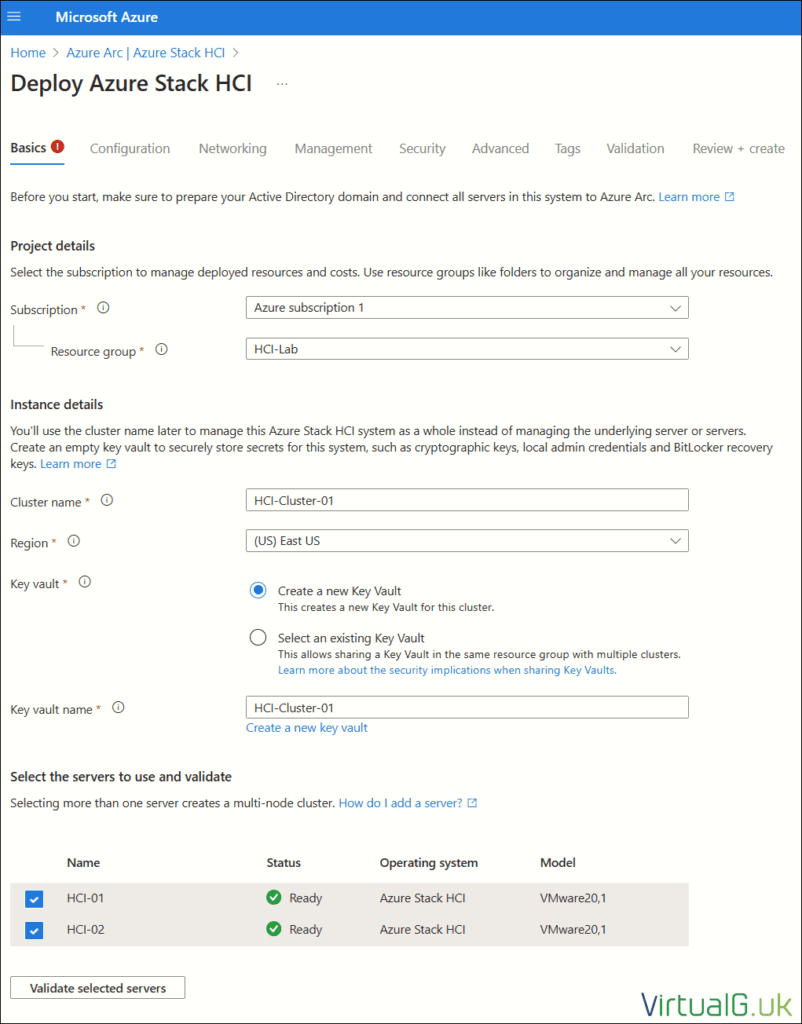

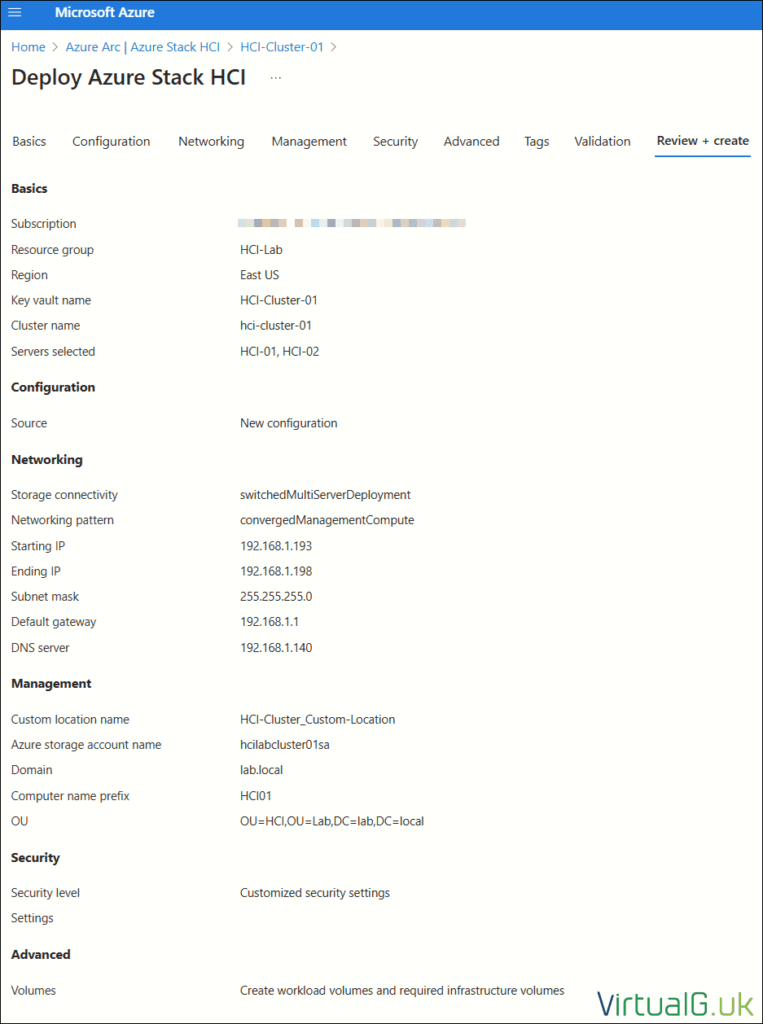

Azure Portal Deployment – Basics

- Ensure the correct Subscription and Resource Group are selected

- Give the cluster a name

- Select a Region

- Create a new Key Vault

- Finally, select all applicable HCI nodes

Once all fields are complete, select Validate Selected Servers

Once validation is successful, select Next: Configuration

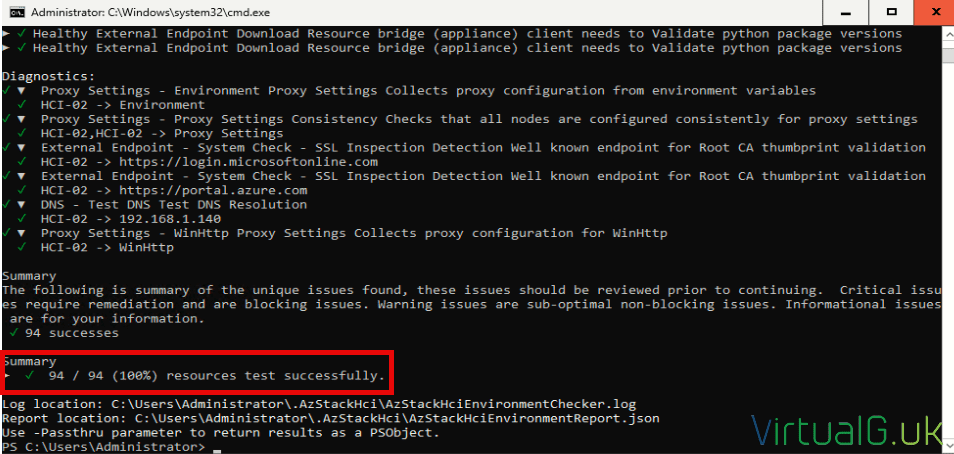

If you encounter any validation errors related to connectivity, use the HCI Environment Checker on each node to verify connectivity.
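The Environment Checker is distributed through the PowerShell Gallery as the `AzStackHci.EnvironmentChecker` module. The sketch below wraps its connectivity test in a hypothetical `Invoke-HciConnectivityCheck` function. It assumes the node can reach the PowerShell Gallery. The module’s other validators (hardware, Active Directory, network) are not covered here.

```powershell
# Hypothetical wrapper around the Environment Checker's connectivity test.
function Invoke-HciConnectivityCheck {
    # Install the Environment Checker from the PowerShell Gallery if it is missing
    if (-not (Get-Module -ListAvailable -Name AzStackHci.EnvironmentChecker)) {
        Install-Module -Name AzStackHci.EnvironmentChecker -Repository PSGallery -Force
    }
    Import-Module -Name AzStackHci.EnvironmentChecker

    # Checks outbound connectivity to the endpoints the deployment requires
    Invoke-AzStackHciConnectivityValidation
}
```

Run `Invoke-HciConnectivityCheck` in a PowerShell session on each node and review any endpoints it reports as failed.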

Should you have any errors regarding extensions, you can check the status of them by navigating to your HCI resource group, selecting your HCI node and then Extensions:

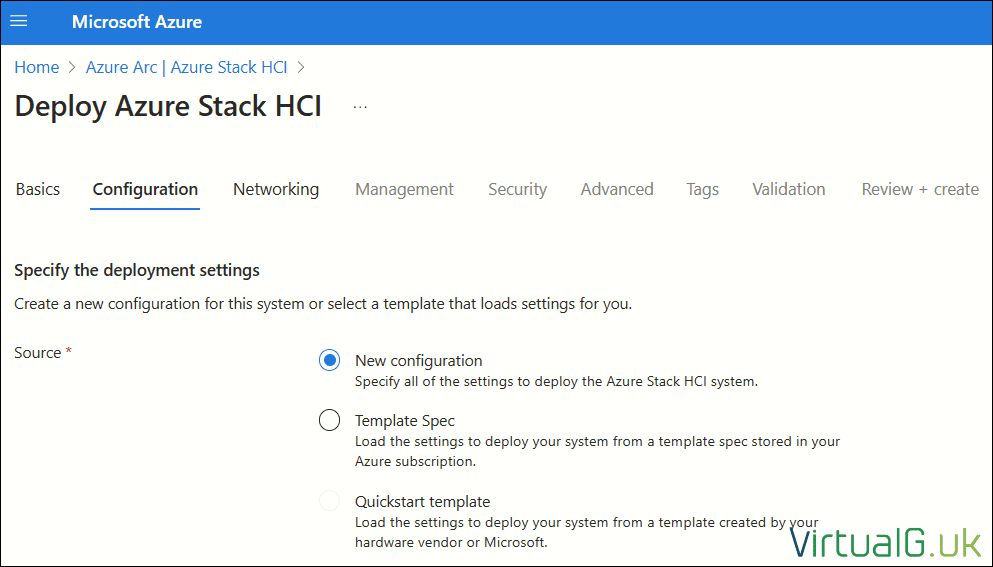

Azure Portal Deployment – Configuration

Since we don’t have an existing template, we’ll enter our configuration for the first time:

Select Next: Networking

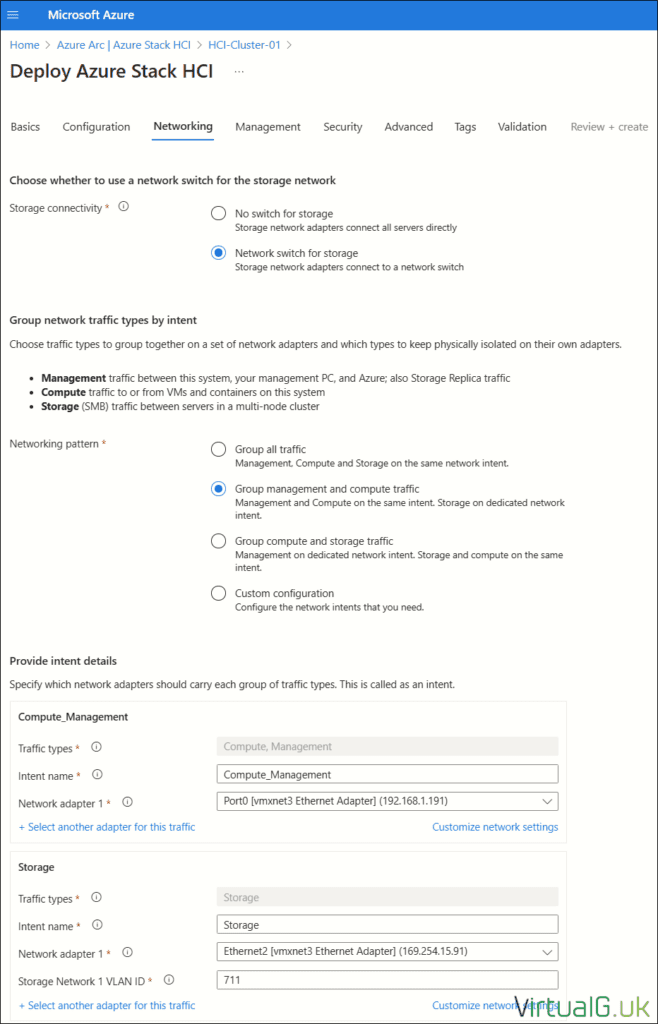

Azure Portal Deployment – Networking

In my lab, I am using a very simple deployment topology.

Your configuration will likely be different.

Storage Connectivity

- Network switch for storage (Storage NICs connect to a network switch)

Networking pattern

- Group all management and compute traffic

Intent Details (Compute and Management)

- Intent Name: Compute_Management

- Network Adapter 1: Port0

Since I’m using virtual machines as my hosts, the NICs do not support RDMA, so I disabled it under the Customize network settings option.

Intent Details (Storage)

- Intent Name: Storage

- Network Adapter 1: Ethernet2

- Storage Network 1 VLAN ID: 711

Once again, I disabled RDMA under the Customize network settings option.

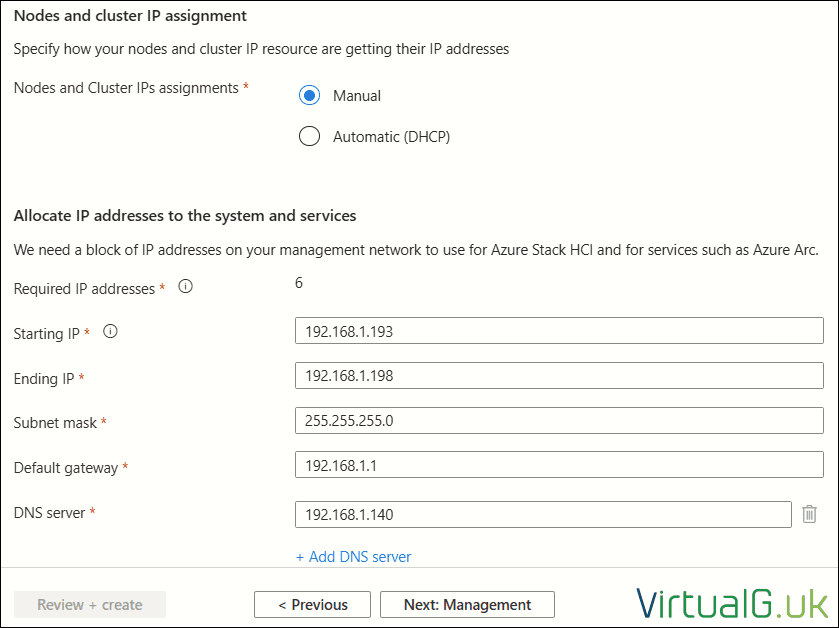

Nodes and cluster IP assignment

- Manual

Allocate IP addresses to the system and services

- Starting IP:

- Ending IP:

- Subnet Mask: 255.255.255.0

- Default Gateway: 192.168.1.1

- DNS Server: 192.168.1.140
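Before entering the range, it is worth checking that it is large enough, sits on the gateway’s subnet, and does not overlap the gateway or DNS server. The sketch below does that arithmetic by converting each IPv4 address to an integer, using the hypothetical helpers `ConvertTo-IPv4Int64` and `Test-HciIpAllocation`. The minimum of six addresses is an assumption based on Microsoft’s 23H2 guidance at the time of writing, so confirm it against the current documentation. The example range 192.168.1.50 to 192.168.1.59 is also hypothetical.

```powershell
# Hypothetical helper: converts a dotted IPv4 string to an integer for comparison and masking.
function ConvertTo-IPv4Int64 {
    param(
        [Parameter(Mandatory)]
        [string]$Address
    )
    $bytes = [System.Net.IPAddress]::Parse($Address).GetAddressBytes()
    # GetAddressBytes() is big-endian (network order); BitConverter uses host order
    if ([BitConverter]::IsLittleEndian) { [Array]::Reverse($bytes) }
    return [int64][BitConverter]::ToUInt32($bytes, 0)
}

# Hypothetical helper: returns one message per problem (nothing if the allocation looks sane).
function Test-HciIpAllocation {
    param(
        [Parameter(Mandatory)][string]$StartingIp,
        [Parameter(Mandatory)][string]$EndingIp,
        [Parameter(Mandatory)][string]$SubnetMask,
        [Parameter(Mandatory)][string]$DefaultGateway,
        [string[]]$DnsServer = @(),
        # Assumed minimum pool size; confirm against current Microsoft guidance
        [int]$MinimumCount = 6
    )

    $start   = ConvertTo-IPv4Int64 $StartingIp
    $end     = ConvertTo-IPv4Int64 $EndingIp
    $mask    = ConvertTo-IPv4Int64 $SubnetMask
    $network = (ConvertTo-IPv4Int64 $DefaultGateway) -band $mask

    $problems = [System.Collections.Generic.List[string]]::new()

    # The range must run upwards and contain enough contiguous addresses
    if ($end -lt $start) {
        $problems.Add('Ending IP is lower than starting IP')
    } elseif (($end - $start + 1) -lt $MinimumCount) {
        $problems.Add("Range contains fewer than $MinimumCount addresses")
    }

    # Both ends of the range must sit in the gateway's subnet
    if ((($start -band $mask) -ne $network) -or (($end -band $mask) -ne $network)) {
        $problems.Add('Range is not in the same subnet as the default gateway')
    }

    # The gateway and DNS servers must not be handed out from the range
    foreach ($address in @($DefaultGateway) + $DnsServer) {
        $value = ConvertTo-IPv4Int64 $address
        if ($value -ge $start -and $value -le $end) {
            $problems.Add("$address falls inside the range")
        }
    }

    return $problems.ToArray()
}
```

For example, `Test-HciIpAllocation -StartingIp '192.168.1.50' -EndingIp '192.168.1.59' -SubnetMask '255.255.255.0' -DefaultGateway '192.168.1.1' -DnsServer '192.168.1.140'` produces no output for this hypothetical range.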

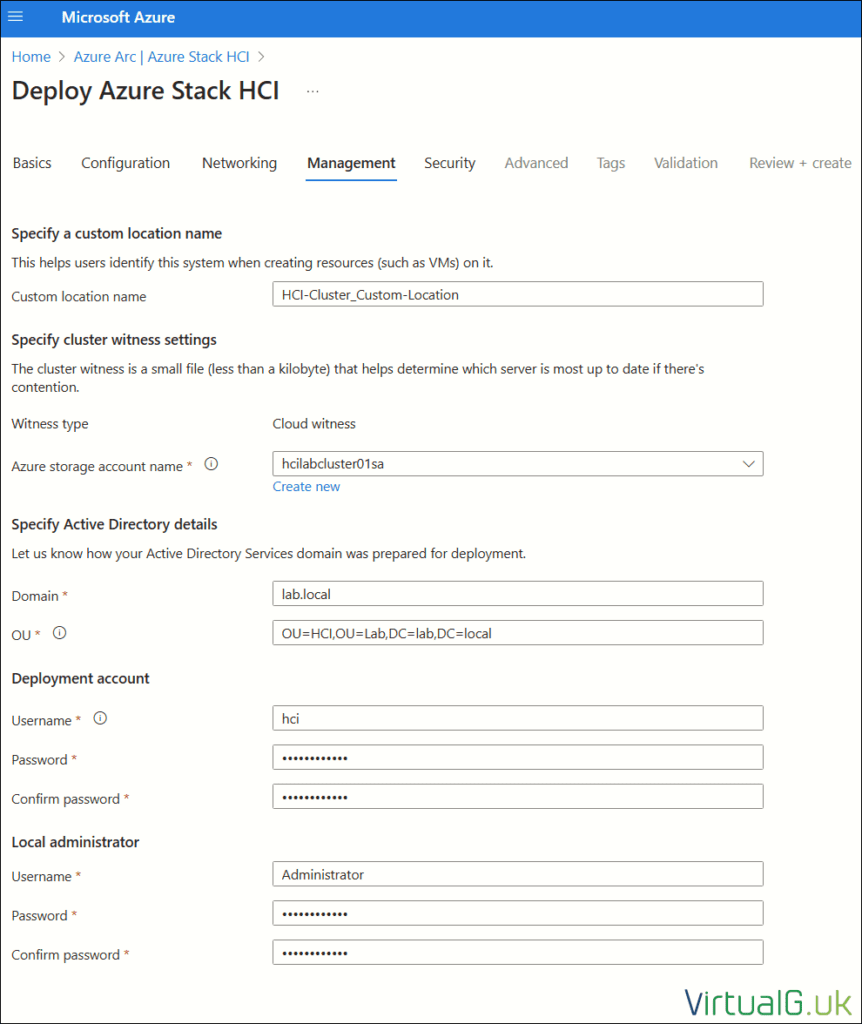

Azure Portal Deployment – Management

You will need the following information from the Active Directory preparation and OS installation steps:

- HCI user account (deployment account)

- OU for the cluster resources

- Password for the HCI user

- Local administrator credentials for the servers

Specify a custom location name

- Custom location name: HCI-Cluster_Custom-Location

Specify cluster witness settings

- Azure storage account name (Make new)

Specify Active Directory details

This is the domain and OU created earlier when preparing Active Directory

- Domain: lab.local

- OU: OU=HCI,OU=Lab,DC=lab,DC=local

Deployment account

This is the domain account created earlier when preparing Active Directory

- Username: hci

- Password: ************

Local administrator

This is the local administrator account on each HCI server; all servers should be configured with the same password.

- Username

- Password

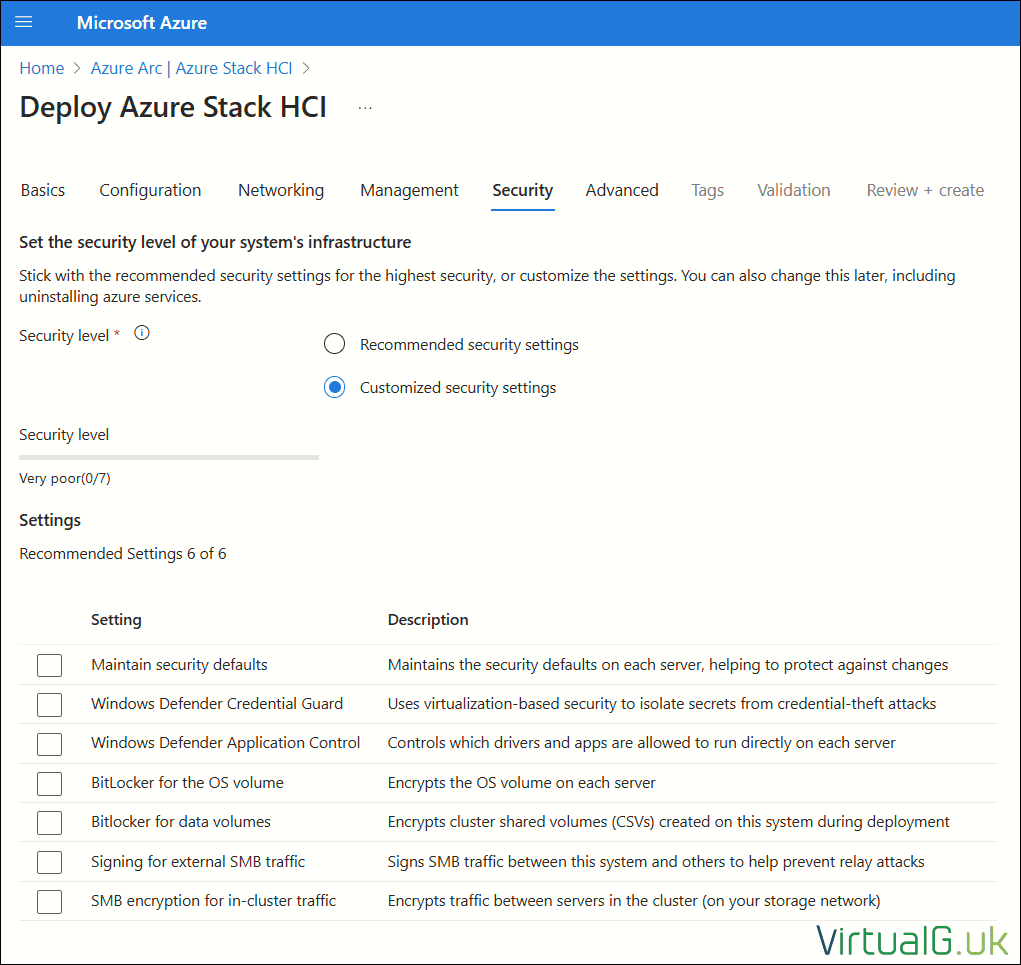

Azure Portal Deployment – Security

For the lab, I disabled all security features, since I have limited compute and storage that is too slow for encryption. I just needed to get the system up and running for demonstration purposes. You clearly would not want to do this for production use cases.

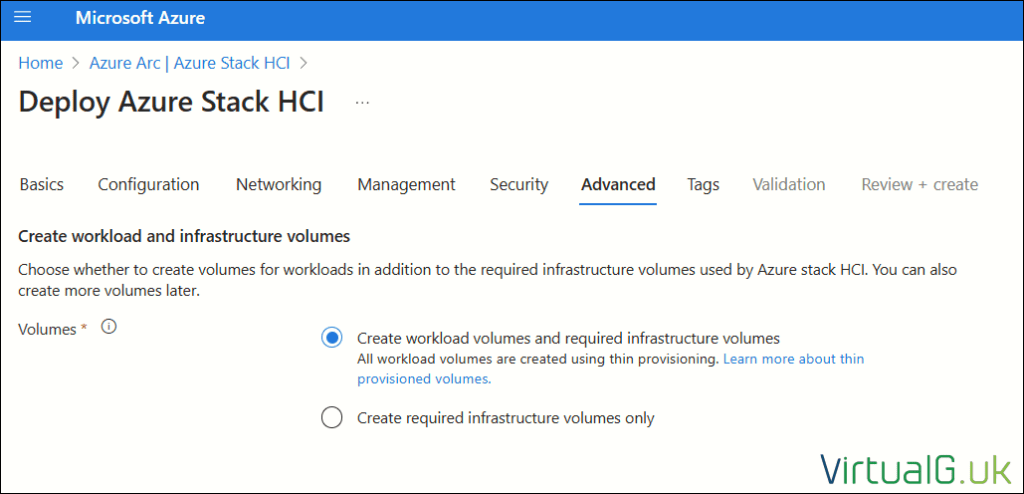

Azure Portal Deployment – Advanced

I only created the infrastructure volumes so that I could manually specify the names and settings of the workload volumes myself.
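Once the cluster is deployed, workload volumes can be created from any node with the Storage module’s `New-Volume` cmdlet. This is a hedged sketch: the wrapper name `New-HciWorkloadVolume` is hypothetical, it assumes the deployment left a single non-primordial storage pool, and it uses mirror resiliency with thin provisioning. That suits a two-node lab but may not suit your cluster.

```powershell
# Hypothetical wrapper: creates a thin-provisioned, mirrored CSV workload volume.
function New-HciWorkloadVolume {
    param(
        [Parameter(Mandatory)]
        [string]$Name,

        [Parameter(Mandatory)]
        [uint64]$SizeBytes
    )

    # Assumes exactly one non-primordial (Storage Spaces Direct) pool exists on the cluster
    $pool = Get-StoragePool -IsPrimordial $false | Select-Object -First 1
    if (-not $pool) { throw 'No non-primordial storage pool found. Run this on a cluster node.' }

    $volumeParams = @{
        StoragePoolFriendlyName = $pool.FriendlyName
        FriendlyName            = $Name
        FileSystem              = 'CSVFS_ReFS'   # Cluster Shared Volume formatted with ReFS
        ResiliencySettingName   = 'Mirror'       # two-way mirror on a two-node cluster
        ProvisioningType        = 'Thin'
        Size                    = $SizeBytes
    }
    New-Volume @volumeParams
}
```

For example, `New-HciWorkloadVolume -Name 'UserStorage01' -SizeBytes 500GB`, where the volume name is hypothetical.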

Tags

Next, you can set tags, which can be useful for organization, management and billing purposes.

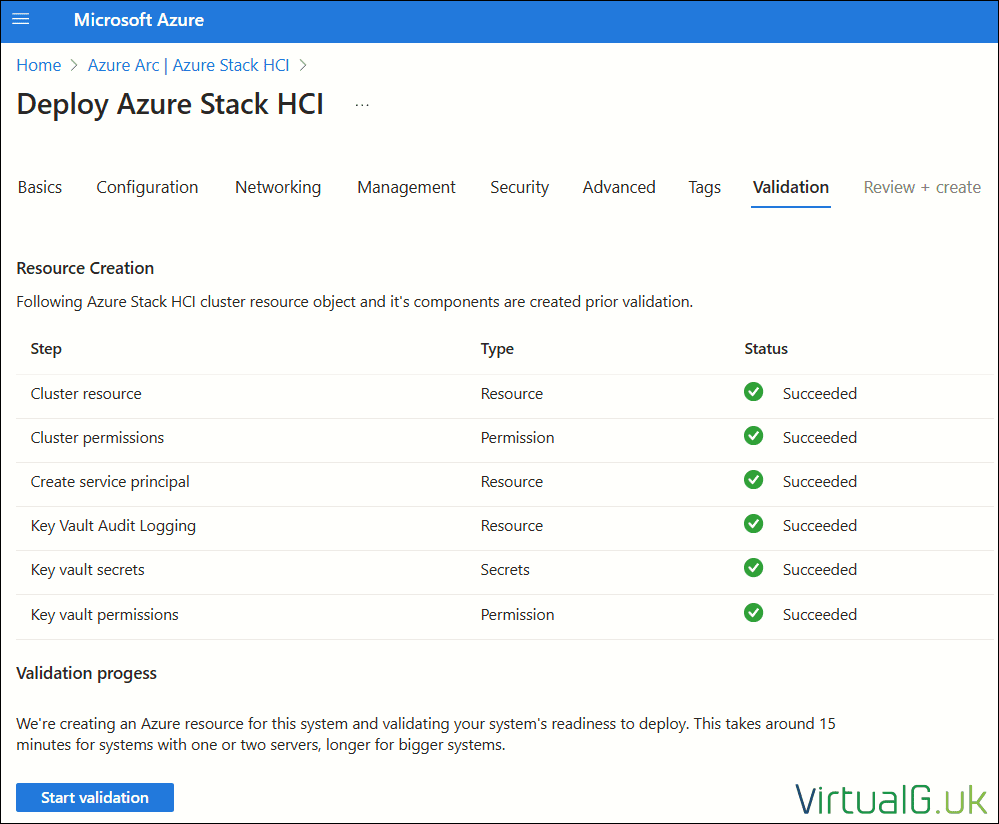

Azure Portal Deployment – Validation

The resource creation will start automatically and the status for each step will change to “Succeeded” once complete.

Once the resource creation is complete, select Start Validation to proceed through the deployment readiness process.

Validation usually takes between 15 minutes and an hour, depending on the size of the cluster

Once complete, the status for each validation task will change from “In progress” to “Success”

Resolve any failures before continuing

Azure Portal Deployment – Review & Create

Review all configuration, then select Create to start creating the HCI cluster.

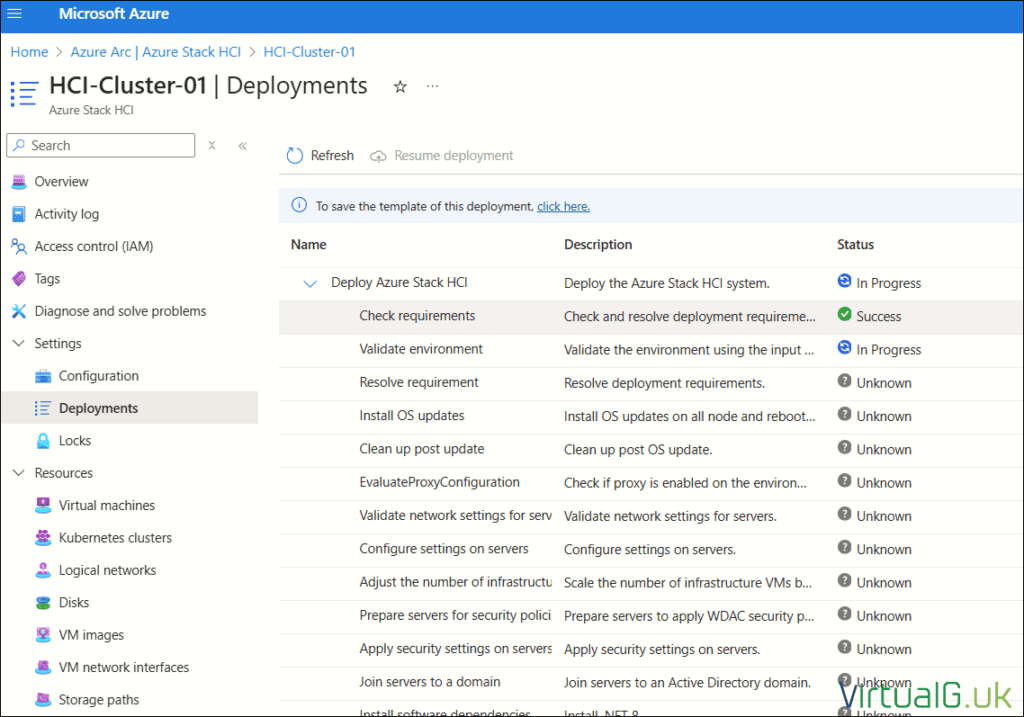

Azure Portal Deployment – Monitoring the Deployment

You can monitor the deployment from the Azure portal:

- Navigate to Azure Stack HCI > All Clusters

- Select your cluster

- Expand Settings in the left pane and select Deployments

- You’ll see all the deployment steps and progress from here

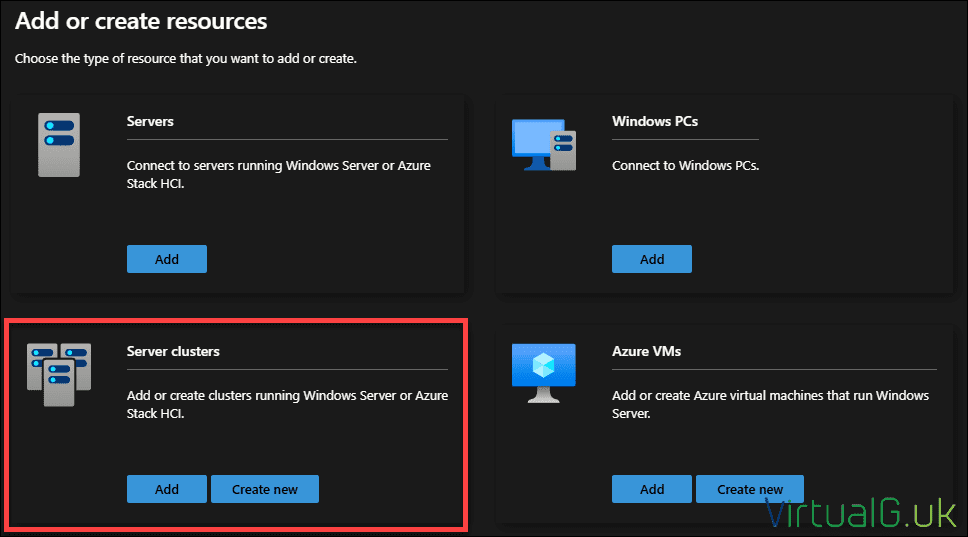

Managing the Azure Stack HCI Cluster

With the cluster successfully deployed, you can now manage it via Azure, Windows Admin Center, PowerShell or any other supported tool.

You can download Windows Admin Center from Microsoft’s website.

Once WAC is installed, you can add an Azure Stack HCI cluster:

Specify the FQDN of the cluster and then connect.

Once connected, you’ll have access to manage the entire cluster, modify settings, create volumes and manage both virtual machines and Kubernetes.


Nice article. I’ve been struggling to get Azure Stack HCI installed on VMware Workstation for a week.

One thing you didn’t mention that people might run into: it looks like the TPM validation code checks whether the TPM certificate’s valid days value is greater than zero. So if you create a new VM, its TPM certificate is created that day and Deploy Cluster fails validation. I put some notes here:

https://david-homer.blogspot.com/2024/09/solved-azure-hci-stack-deploy-cluster.html

Hi David

Thanks for the information on TPMs. In my vSphere lab, I created virtual TPMs for the VMs on the same day as deployment and I didn’t have any issues.

There was likely a recent change to the function that checks the TPM (These things are being constantly updated in Azure and also in the extensions)

Many thanks for sharing!

Graham